The Equation of Trust: Weighing the Benefits and Risks of Artificial Intelligence

Imagine a world where your self-driving car takes you safely through the busiest streets, where every pedestrian is noticed, and every traffic signal respected, no matter the weather or time of day. Or picture an AI-powered recruitment system that identifies the best candidates based solely on their skills and potential, completely blind to their gender, ethnicity, or background. This is the promise of artificial intelligence—a technology that can transform our lives in ways we’ve only begun to imagine.

Yet, as with any powerful tool, AI comes with its own set of challenges. Take, for example, a healthcare AI system that misdiagnoses patients due to biased training data, leading to incorrect treatments and adverse health outcomes. Or consider an AI-driven financial algorithm that discriminates against certain demographic groups, resulting in unfair denial of loans and financial services. These stories underscore the critical need for robust regulation to ensure that AI systems operate fairly and responsibly.

A survey commissioned by Workday and conducted by FT Longitude in November and December 2023 highlighted a significant trust gap in the workplace regarding AI. The survey covered 1,375 business leaders and 4,000 employees across 14 countries. Of those, only 62% of business leaders and 52% of employees trust AI adoption in their organizations. Key concerns include the lack of clear guidelines for AI use, insufficient skills for AI implementation, and uncertainty about the ethical deployment of AI technologies.¹

To address these issues, the concept of “trusted AI” has emerged, emphasizing ethical, fair, transparent, and accountable AI development. Trusted AI aims to mitigate the potential risks of AI and unlock its full potential for sustainable development. In the future, AI can help tackle global challenges like climate change and resource scarcity, but only if it is developed with trust and in line with responsible practices.

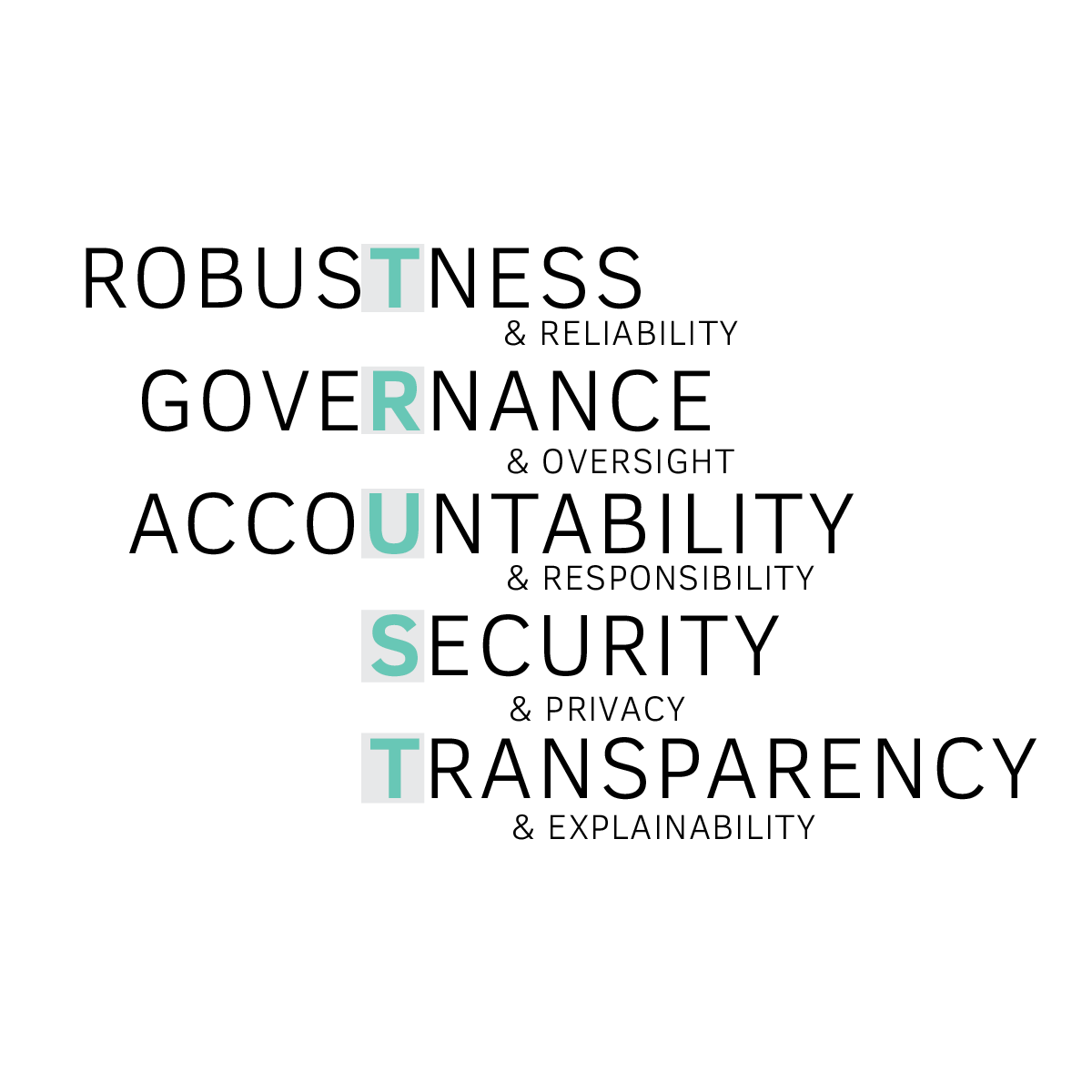

How can you promote trust in AI?

To put these responsible practices into effect, five key dimensions have been identified. Each must be guaranteed in order to develop and provide AI that can be trusted.

- Robustness & Reliability: AI systems need to function correctly under various conditions, delivering consistent and dependable outcomes.

- Governance & Oversight: A governance framework should be established to oversee the ethical development and deployment of AI systems.

- Accountability & Responsibility: Defining clear roles and responsibilities for all stakeholders involved in AI systems ensures accountability for their outcomes.

- Security & Privacy: Data used by AI systems must be safeguarded to protect individual privacy and ensure the fairness and security of sensitive information.

- Transparency & Explainability: AI systems need to be designed so that their decisions are clear, understandable, and interpretable, which builds trust and accountability.

The EU is promoting trust in AI with a precise regulatory framework: the EU AI Act

The European Union has taken a leading role in AI regulation with the landmark EU AI Act. This legislation aims to establish a framework for developing, deploying, and using AI systems that prioritizes trust, safety, and ethical principles. The AI Act adopts a risk-based approach, classifying AI systems into four categories:

Minimal Risk

Simple games, spam filters, or data filters face minimal regulatory requirements as they pose little to no risk to users or society.

Limited Risk

Systems such as chatbots face lighter scrutiny, centered on transparency: users should be made aware that they are interacting with an AI system. Providers must also maintain enough documentation to allow for accountability and basic risk management.

High Risk

AI systems in critical areas like infrastructure and employment screening must comply with stringent requirements before market placement.

These requirements include rigorous testing, documentation, and transparency to ensure they do not lead to significant risks to health, safety, or fundamental rights.

Unacceptable Risk

AI systems posing a clear threat to fundamental rights, safety, or security are banned. Examples include social scoring systems used by governments and real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, subject to narrow exceptions.

These systems are banned because they infringe on fundamental human rights or pose significant security threats.

The EU AI Act marks only the beginning of the journey towards responsible AI development. Although it serves as a catalyst for positive change, setting a standard for ethical AI practices by encouraging transparency, accountability, and fairness, companies need more than just regulations to succeed. Implementing these regulations effectively requires expert guidance and support.

To ensure effective governance and compliance of AI systems, a comprehensive approach needs to be established and rigorously implemented within the company.

- Firstly, all AI systems and the key stakeholders responsible for these systems need to be identified.

- Subsequently, an AI system catalogue needs to be created, classifying each system according to its risk level, ranging from Unacceptable Risk to Minimal Risk.

- Based on this risk classification, appropriate measures are derived, and a compliance strategy is developed to implement them across the organization.

- Governance structures are to be reviewed and adapted to assign clear roles and responsibilities, ensuring the organization is properly structured for continuous monitoring of both existing and new AI systems.

- Individuals responsible for AI systems must undergo training so that they can monitor these systems effectively and manage changes to them.

- Additionally, awareness measures need to be implemented to educate all employees about the significance and implications of AI systems within the organization.

This holistic approach will foster a robust framework for managing AI systems, ensuring compliance and mitigating risks effectively.
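As a rough illustration of the first three steps, the sketch below models a small AI system catalogue in Python. Everything in it is hypothetical: the `RiskTier`, `AISystem`, and `compliance_plan` names, the example systems and owners, and the measures attached to each tier are assumptions made for illustration, not wording from the AI Act and not legal advice.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    # Ordered from least to most severe, mirroring the four tiers of the AI Act.
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    UNACCEPTABLE = 3


@dataclass(frozen=True)
class AISystem:
    name: str
    owner: str      # accountable stakeholder, identified in the first step
    tier: RiskTier  # assigned when the catalogue is built


# Hypothetical follow-up measures per tier; a real programme would derive
# these from a legal assessment of each system.
MEASURES = {
    RiskTier.MINIMAL: "no additional obligations; keep in catalogue",
    RiskTier.LIMITED: "document the system and inform users",
    RiskTier.HIGH: "test, document, and review before deployment",
    RiskTier.UNACCEPTABLE: "decommission; use is prohibited",
}


def compliance_plan(catalogue):
    """Return (name, owner, measure) rows, most severe tier first."""
    ordered = sorted(catalogue, key=lambda s: s.tier, reverse=True)
    return [(s.name, s.owner, MEASURES[s.tier]) for s in ordered]


# Example catalogue with made-up systems and owners.
catalogue = [
    AISystem("game-difficulty-tuner", "Product", RiskTier.MINIMAL),
    AISystem("cv-screening", "HR", RiskTier.HIGH),
    AISystem("support-chatbot", "Customer Service", RiskTier.LIMITED),
]

for name, owner, measure in compliance_plan(catalogue):
    print(f"{name} ({owner}): {measure}")
```

Sorting by tier puts the systems that need the most attention at the top of the plan, which is one simple way to prioritize compliance work; governance, training, and awareness measures would sit on top of a catalogue like this.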


The road to responsible AI is not easy, but if you start now, you can pave the way for a more trustworthy future

The EU AI Act marks a new era of AI regulation, positioning Europe as a global leader in fostering trustworthy AI. With its comprehensive framework, the AI Act ensures uniform rules for AI development, marketing, and usage across the EU, prioritizing safety and fundamental rights. Companies will be held accountable for harm resulting from their AI systems, with penalties for the most serious violations of up to 35 million euros or 7% of worldwide annual turnover, whichever is higher. Compliance is not just a legal requirement but a strategic imperative, offering competitive advantages through ethical AI innovation.
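To make the scale of the penalty ceiling concrete, here is a minimal Python sketch. It assumes the ceiling is whichever of the two figures is higher, which is our reading of the Act for the most serious infringements; the function name `max_fine_eur` and the example turnover figures are hypothetical.

```python
FIXED_CAP_EUR = 35_000_000   # fixed ceiling named in the article
TURNOVER_SHARE_PERCENT = 7   # share of annual turnover named in the article


def max_fine_eur(annual_turnover_eur: int) -> int:
    """Upper bound of the fine, assuming the higher of the two figures applies."""
    turnover_based = annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


# Hypothetical companies with 100 million and 1 billion euros in turnover.
print(max_fine_eur(100_000_000))
print(max_fine_eur(1_000_000_000))
```

Under this assumption, the fixed amount dominates for smaller companies, while the turnover-based figure takes over once annual turnover exceeds 500 million euros.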

By embracing the principles outlined in the AI Act, organizations can enhance their competitive positioning and branding, fostering trust among customers and alliance partners. As the AI Act was formally adopted in May 2024, proactive adaptation to these regulations is essential, laying the foundation for a future where responsible AI drives innovation and secures market leadership.

1 Workday Global Survey Reveals AI Trust Gap in the Workplace – Jan 10, 2024

ISO/IEC 27001:2013 certified
